Seeing through obstructions with diffractive cloaking

Abstract

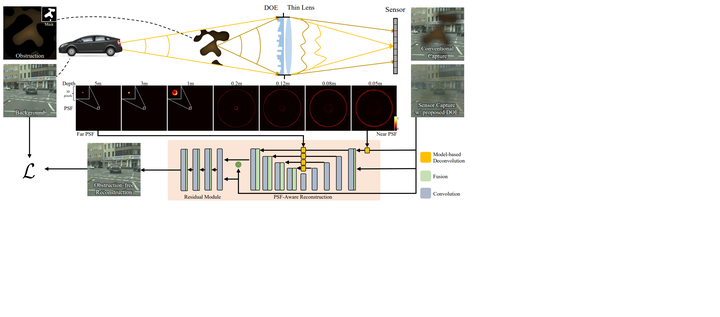

Unwanted camera obstructions, including both scene occluders near the camera and partial occlusions of the camera cover glass, can severely degrade captured images. Such occlusions can cause catastrophic failures in scene understanding tasks such as semantic segmentation, object detection, and depth estimation. Existing camera arrays capture multiple redundant views of a scene to see around thin occlusions. Such multi-camera systems effectively form a large synthetic aperture, which can suppress nearby occluders with a large defocus blur, but they significantly increase the overall form factor of the imaging setup. In this work, we propose a monocular single-shot imaging approach that optically cloaks obstructions by emulating a large array. Instead of relying on different camera views, we learn a diffractive optical element (DOE) that performs depth-dependent optical encoding, scattering nearby occlusions while allowing paraxial wavefronts to be focused. We computationally reconstruct unobstructed images from these superposed measurements with a neural network that is trained jointly with the optical layer of the proposed imaging system. We assess the proposed method in simulation and with an experimental prototype, validating that the proposed computational camera is capable of recovering occluded scene information in the presence of severe camera obstruction.
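The intuition that a larger aperture suppresses nearby occluders through defocus blur can be illustrated with a simplified geometric thin-lens model. The sketch below is only an illustration of that intuition, not the diffractive model or the learned DOE described in this work; the function name `blur_circle_diameter` and all parameter values are hypothetical.

```python
def blur_circle_diameter(aperture_d, focal_len, focus_dist, object_dist):
    """Geometric blur-circle diameter on the sensor for a thin lens.

    All lengths share one unit (e.g. metres). Assumes both distances
    exceed the focal length, so that real images are formed.
    """
    # Image distances from the thin-lens equation 1/f = 1/s + 1/s'.
    v_focus = focal_len * focus_dist / (focus_dist - focal_len)
    v_obj = focal_len * object_dist / (object_dist - focal_len)
    # The cone of light converging at v_obj is cut by the sensor at v_focus.
    return aperture_d * abs(v_obj - v_focus) / v_obj


# Hypothetical setup: 50 mm lens focused at 5 m, occluder 0.2 m from the lens.
small = blur_circle_diameter(0.005, 0.05, 5.0, 0.2)  # 5 mm aperture
large = blur_circle_diameter(0.050, 0.05, 5.0, 0.2)  # 50 mm (synthetic) aperture
in_focus = blur_circle_diameter(0.050, 0.05, 5.0, 5.0)
print(f"small aperture blur: {small * 1e3:.2f} mm")
print(f"large aperture blur: {large * 1e3:.2f} mm")
```

In this model the blur diameter grows linearly with the aperture diameter, so a tenfold larger aperture spreads the nearby occluder over a tenfold wider region while the in-focus scene stays sharp.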