Mask-ToF: Learning Microlens Masks for Flying Pixel Correction in Time-of-Flight Imaging

Abstract

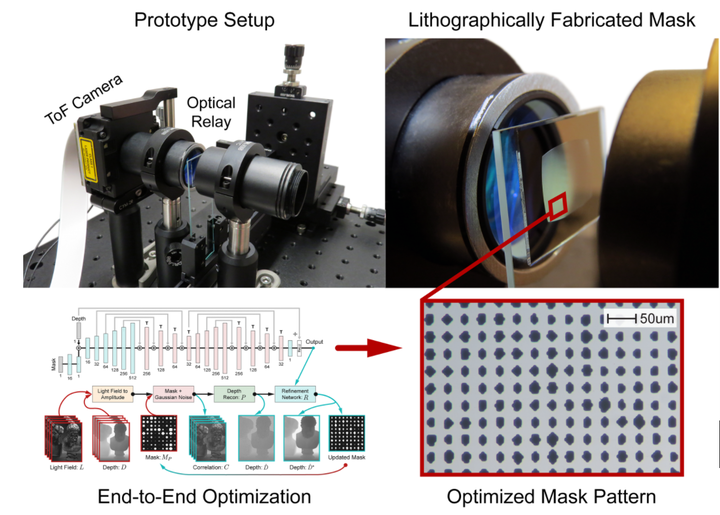

Flying pixels (FPs) are pervasive artifacts that occur at object boundaries, where background and foreground light mix to produce erroneous measurements that can negatively impact downstream 3D vision tasks. Mask-ToF, with the help of a differentiable time-of-flight simulator, learns a microlens-level occlusion mask pattern that modulates the selection of foreground and background light on a per-pixel basis. When trained end-to-end with a depth refinement network, Mask-ToF effectively decodes these modulated measurements to produce high-fidelity depth reconstructions with significantly reduced flying pixel counts. We photolithographically manufacture the learned microlens mask and validate our findings experimentally using a custom-designed optical relay system. As seen on the right, for real scenes Mask-ToF achieves significantly fewer flying pixels than a “naive” circular aperture approach, while maintaining high signal-to-noise ratio (SNR).
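The mixing mechanism described above can be illustrated with a toy continuous-wave ToF model. This is a minimal sketch under stated assumptions, not the differentiable simulator used by Mask-ToF: the modulation frequency `F_MOD`, the four-path pixel, the binary mask values, and the helper names `depth_to_phasor`, `phasor_to_depth`, and `pixel_depth` are all hypothetical and chosen only for illustration. Each light path reaching a pixel contributes a phasor whose phase encodes its depth; summing foreground and background phasors yields a depth that belongs to neither surface.

```python
import numpy as np

C = 299_792_458.0  # speed of light in m/s
F_MOD = 20e6       # assumed modulation frequency in Hz (illustrative only)


def depth_to_phasor(depth_m, amplitude=1.0):
    """Map depth to a complex phasor for an idealized continuous-wave ToF pixel."""
    phase = 4.0 * np.pi * F_MOD * depth_m / C  # round-trip phase delay
    return amplitude * np.exp(1j * phase)


def phasor_to_depth(phasor):
    """Recover depth from the phase of a measured phasor (no phase unwrapping)."""
    phase = np.angle(phasor) % (2.0 * np.pi)
    return C * phase / (4.0 * np.pi * F_MOD)


def pixel_depth(path_depths, mask):
    """Depth reported by one pixel that sums the light paths passed by `mask`.

    `path_depths` holds the depth seen along each (hypothetical) sub-aperture
    path; `mask` holds a per-path weight in [0, 1], where 0 blocks the path.
    """
    path_depths = np.asarray(path_depths, dtype=float)
    mask = np.asarray(mask, dtype=float)
    measured = np.sum(mask * depth_to_phasor(path_depths))
    return phasor_to_depth(measured)


# A boundary pixel: two paths hit the foreground (1 m), two hit the background (2 m).
paths = [1.0, 1.0, 2.0, 2.0]

open_aperture = pixel_depth(paths, [1, 1, 1, 1])  # all paths mixed together
masked = pixel_depth(paths, [1, 1, 0, 0])         # background paths blocked

print(f"open aperture: {open_aperture:.3f} m")
print(f"masked:        {masked:.3f} m")
```

In this toy setting the open aperture reports a depth midway between the two surfaces, which is a flying pixel, while blocking the background paths recovers the foreground depth at the cost of discarding half of the light. That trade-off between flying pixel suppression and signal strength is what a learned mask pattern would have to balance.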